Michigan Muse, Winter 2023

Expanding Horizons: A Look Inside the Performing Arts Technology Department

By Judy Galens

While every department in the School of Music, Theatre & Dance explores the ways technology can enhance or facilitate scholarship and the performing arts, one department sits squarely at the intersection of technology and performance.

The Department of Performing Arts Technology is wholly devoted to and immersed in the exploration of technology, combining the study of music and engineering. Its undergraduate students can explore a variety of paths within the department, pursuing a BFA in performing arts technology, a BM in music & technology, or a BS in sound engineering; graduate students can seek an MA in media arts and, as of 2022–23, a PhD in performing arts technology.

The PAT faculty also explore a range of disciplines, from musical performance and composition to sound engineering, filmmaking, and experimental research.

At any given moment, the students and faculty in the PAT department are engaged in an astounding variety of research activities, experiments, projects, and initiatives designed to advance their field, make new discoveries, and traverse new frontiers. The following stories explore just a sampling of these endeavors.

Music and Technology Camp Takes Aim at the Issue of Gender Imbalance

The field of music technology has long been male dominated. A 2022 report on the music industry from the USC Annenberg Inclusion Initiative found that, over a 10-year period (2012–21), male producers outnumbered female producers 35 to 1. One possible way to remedy this gender imbalance is to increase the number of women graduating with degrees in the field. But what to do when academic programs also struggle with gender imbalance?

In 2017, Michael Gurevich, associate professor of music and then the chair of SMTD’s Department of Performing Arts Technology (PAT), joined with other PAT faculty to try to answer that question; they participated in a program called Faculty Leading Change, sponsored by the ADVANCE Program at U-M. “After reviewing relevant research and engaging in community conversations and focus groups with our students,” Gurevich said, “we found that the imbalance was happening much earlier in our students’ lives than the time they get to college. In other words, girls in middle and high school weren’t seeing music technology as a field of study for them.”

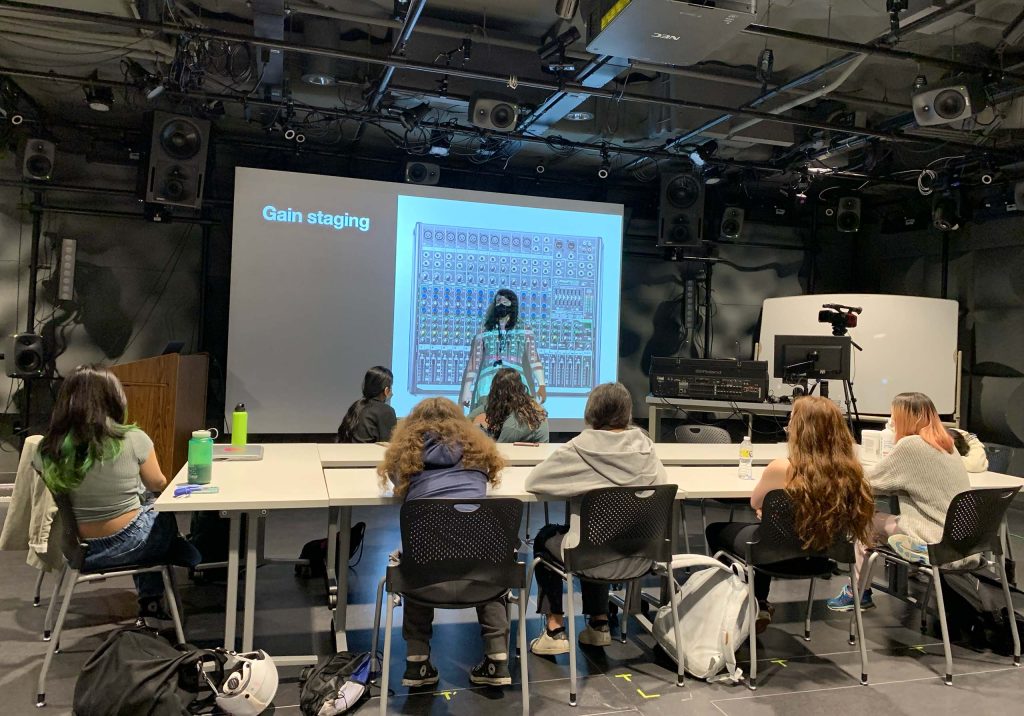

That very summer, the PAT department took a significant step forward in helping girls see music technology as a viable career option: the department held the first Girls in Music & Technology (GiMaT) camp, part of SMTD’s MPulse summer program. One goal of the program, Gurevich explained, is to engage students by giving them firsthand experience: “We wanted to bring them to our facilities so that they could see themselves working in this environment.” The program also, he noted, “makes them better prepared and more competitive for admission to the PAT program, which is highly selective.”

The two-week camp, which is open to all but focuses on encouraging girls’ participation in music technology, covers a broad range of topics, including electronic music performance and composition, music production, computer programming, and lighting design. The faculty running the camp also invite guest artists and researchers to speak to students about their experiences. The two weeks of hands-on learning for GiMaT students results in two works in their portfolios, an essential step for those interested in applying to university degree programs in performing arts technology.

For the students who attend GiMaT, music is already a big part of their lives, and most are considering a career in the field. Some have even tried their hand at music production. “But not all of them come in with a great deal of experience in music technology,” noted GiMaT director Zeynep Özcan, lecturer in the PAT department, “so they may not immediately have a good sense of the diversity of career opportunities that music technology can offer. That’s why our curriculum is designed to cover a broad range of topics and get them exposed to the breadth of opportunities in our field.”

After the first GiMaT in 2017, the makeup of the PAT department began to change almost immediately. “We’ve had quite a number of campers come back and apply for studies in our department,” noted Özcan, who has run GiMaT since 2020. “This, combined with the increased efforts towards diversity in our field, has had a positive impact on the student composition in our department.”

Claire Niedermaier is one of the many young women whose path was altered by attending GiMaT. “Before GiMaT, I knew I wanted to study engineering in college, but I wanted to find a way to combine my interests of math, science, technology, and music. GiMaT introduced me to what the fields of sound engineering and music technology have to offer,” she said.

After her GiMaT experience (“honestly some of the best weeks of my life”), Claire applied and was accepted to the PAT program at SMTD. She is now a junior, pursuing a BS in sound engineering. “Without GiMaT,” she said, “I do not think I would be in SMTD studying PAT. GiMaT allowed me to explore what PAT has to offer and provided me with resources to become more involved.”

The summer after her first year at SMTD, Claire was invited to be a student teacher at GiMaT. She taught a lesson on the history of electronic music and women in the field, and she helped the students with their projects. “It was an incredibly rewarding experience to be able to teach and mentor students, since my student teachers when I attended GiMaT were so supportive and inspiring to me,” she shared. She had an important message to convey to the girls she worked with that summer: “It’s incredibly important for them to know that they belong in a field like music technology, and they have every right to take up space and make their voices heard.”

For the PAT faculty, it’s been gratifying to see the positive effect GiMaT has had on the department and its students. Acknowledging that the field of music technology still has a long way to go to improve diversity in its ranks, Özcan noted that “GiMaT has certainly been a strong step in the right direction.” A composer, educator, and author, Özcan recognizes the impact of key music technology experiences in her own life and career, and she is dedicated to providing others with such life-changing exposure. “Getting introduced to music technology early on in my career as an artist has changed my perspective towards music in a fundamental way,” she said. “The capacity of this camp to evoke a similar realization in an aspiring artist means the world to me.”

Bringing the Audience into a Virtual Reality Performance

While recent technological advances make it easier than ever for musicians to share their work with audiences in virtual ways, each advance seems to bring with it a pool of new challenges. With the support of the Arts Initiative – a university-wide program seeking to place the arts at the center of research, teaching, and community engagement at U-M – an SMTD team led by Anıl Çamcı, assistant professor in the Department of Performing Arts Technology (PAT), is diving head-first into that pool, seeking innovative solutions to make virtual experiences more entertaining and engaging.

As part of its mission, the Arts Initiative offers funding for arts-centered research and creative projects. Çamcı’s project, Bringing Down the Fifth Wall: A System for Delivering VR Performances to Large Audiences, is among the initiative’s pilot projects launched during its startup phase.

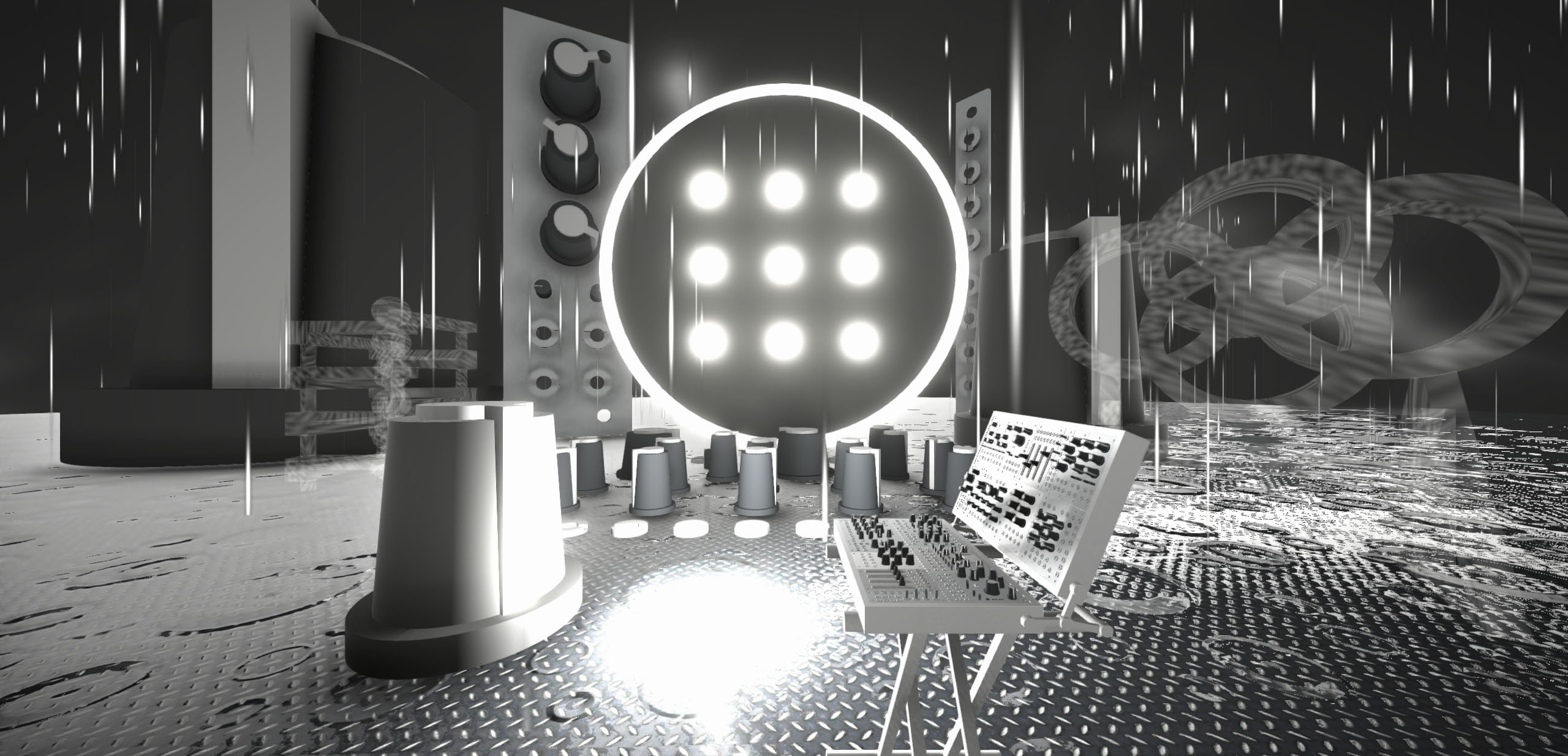

Çamcı has done extensive research on the issues associated with presenting virtual reality (VR) performances to large audiences. His goal is to help artists create VR performances that take full advantage of the promise of the medium, shedding the constraints imposed by physical reality. In contrast to a consumer-facing application in which a user would enter a VR environment and play with musical instruments in the style of a game, for this experience a musician performs in a simulated environment, with VR instruments, in front of an actual audience – whether in person or on a video-conferencing platform like Zoom.

As Çamcı explained in an episode of the Michigan Minds podcast, “When I mention a VR instrument, I don’t mean like a virtual violin that you would play in simulated space. I think that does injustice to both violin performance and VR. What I mean instead are instruments that would otherwise be impossible to implement. Maybe this is an instrument that defies gravity or laws of physics. Maybe it’s an instrument where your body can pass through the instrument, or maybe it’s an instrument that is blown up to the scale of a planet. So these would be instances where the use of the virtual medium in a musical application is truly justified.”

With current VR technology, bringing an entire concert audience into VR along with the performer is not feasible. Providing a VR headset to each member of a large concert audience, for example, would not be cost effective and could cause issues with sanitation. The challenge, then, is how to show the audience what goes on in a VR environment in a way that is feasible for the musician and enjoyable for the audience.

There are a few existing ways to share a musician’s virtual performance with others, but each method has its obstacles. One frequently taken approach is to show the audience the feed from the performer’s headset by projecting it on a screen. But this method, Çamcı pointed out, “burdens the musician with another layer of performance – in addition to playing their instrument, they now have to curate what’s being shown to the audience.” In addition, that method captures every minute head movement the musician makes and amplifies these movements on the screen, making for a jittery representation of the virtual world.

Another option for showing VR performances in a concert setting is to set up virtual cameras in VR and show the feed from those cameras to the audience. This method, however, is cumbersome and requires detailed customizations for each concert space.

Removing Obstacles to VR Performance

After confronting these obstacles in his own performances and those of other musicians, Çamcı began working on an interactive virtual cinematographer system that would capture the best of existing approaches without creating undue burdens for the musician. With the support of the Arts Initiative, and working with students from SMTD, the College of Engineering, and the School of Information, he began developing this system.

The system offers virtual implementations of common cinematography and camera movement techniques, such as zoom, dolly, and panning cameras, as well as first-person and third-person cameras. More importantly, the system is configured and controlled, Çamcı said, “based on a) the music that is being performed in real time, and b) the way the performer acts or moves and behaves in VR. That way it creates somewhat of a filmic representation of what’s going on in VR.”

The technology the team developed uses real-time analysis of the music to control the camera system. The result includes some visual effects that naturally come to mind when thinking about the relationship between music and cinematography, such as visuals that fade in or out along with the music, and cuts between cameras that mimic the rhythm of the performance. But this system goes further, analyzing the gaze, attention, and interactions of the performer. “We have a rolling list of objects that the performer might be paying attention to at any given time,” said Çamcı. “When a certain attention threshold is crossed, the camera system picks up on the objects that the performer is engaging with” and shows the audience those objects.
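The attention mechanism Çamcı describes can be pictured with a short sketch. This is a hypothetical illustration, not the team's code: the class name, the decay, gain, and threshold values, and the object names are all assumptions. Each object the performer looks at accumulates a score, scores fade over time so the list keeps rolling, and the camera follows an object only once its score crosses the threshold.

```python
from dataclasses import dataclass, field


@dataclass
class AttentionTracker:
    """Rolling attention scores for objects in a VR scene (hypothetical model)."""
    threshold: float = 1.0  # assumed score at which the camera follows an object
    decay: float = 0.9      # assumed per-frame fade so stale attention drops off
    gain: float = 0.3       # assumed score added per frame of gaze or interaction
    scores: dict = field(default_factory=dict)

    def update(self, gazed_object=None):
        """Advance one frame; gazed_object is what the performer attends to, if anything."""
        # Fade every existing score and forget objects that have faded out.
        for name in list(self.scores):
            self.scores[name] *= self.decay
            if self.scores[name] < 0.01:
                del self.scores[name]
        # Reward the object currently holding the performer's attention.
        if gazed_object is not None:
            self.scores[gazed_object] = self.scores.get(gazed_object, 0.0) + self.gain

    def camera_target(self, default="wide_shot"):
        """Return the object to frame, or the default shot if nothing crosses the threshold."""
        if not self.scores:
            return default
        name, score = max(self.scores.items(), key=lambda item: item[1])
        return name if score >= self.threshold else default


tracker = AttentionTracker()
for frame in range(6):
    tracker.update("synth")  # the performer keeps looking at a hypothetical "synth" object
    print(frame, tracker.camera_target())
```

With these assumed values, a brief glance never moves the camera, while sustained attention does. A real system would presumably combine gaze with interaction data and with the music analysis described above.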

The result is an engaging performance that takes place in an imagined world but reflects the reality of the performers’ movements, their reactions to the music, and the visual objects that they are drawn to. And because this system doesn’t require a cumbersome, complicated setup ahead of time, it becomes far more accessible, enabling more artists to make use of VR in their performances.

Future Plans

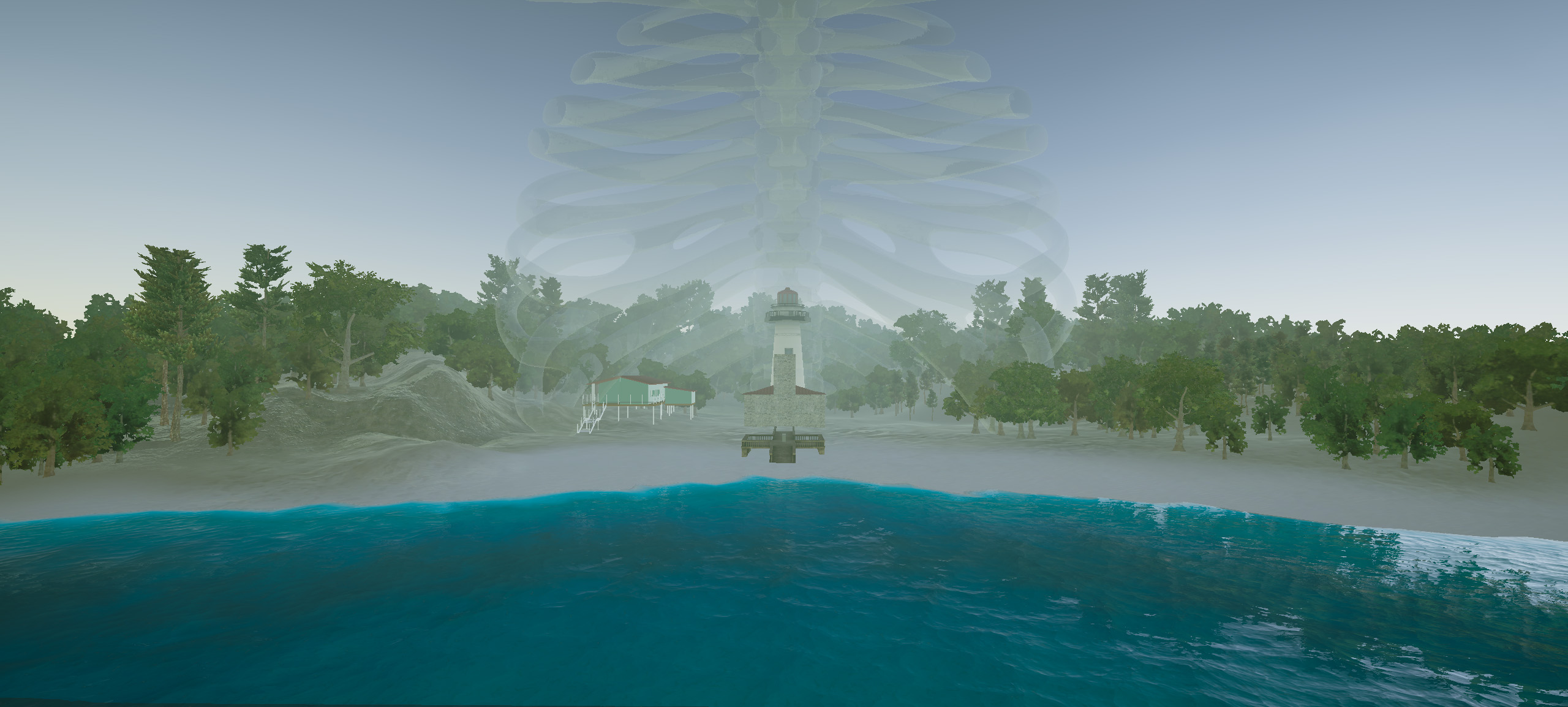

The team is currently working on the development of several VR performance pieces to evaluate and showcase the system, including Çamcı performing in VR with a hardware modular synthesizer and a networked VR piece with his former student, Matias Vilaplana (MA ’19, media arts), who will join the performance remotely from Charlottesville, Virginia, while Çamcı is in Ann Arbor. Additional performances will involve Çamcı’s SMTD collaborators: Erik Santos, associate professor and chair of composition, will perform one of his pieces for vocals, guitar, and harmonica in VR. And Amy Porter, professor of music, will perform Bardo, a piece for flute and electronics composed by Santos, within a virtual reality inspired by Whitefish Point in Michigan’s Upper Peninsula.

Future plans for the technology include further developing its applications for musicians who wish to collaborate but are not co-located. In addition, as the project developed, the team discovered educational applications. For example, Çamcı explained, in his immersive media class, there are times when he needs to go into VR to demonstrate a concept or technique. At those moments, he noted, “I become the performer in VR and my students become the audience outside of VR. So this system will therefore support instructional activities to showcase virtual environments to student cohorts in a dynamic way and from multiple angles.”

Çamcı cited this project as an example of how technology can serve artistic expression, while at the same time, the arts can encourage and promote the need for new technology. “We can sometimes arrive at the misconception that technology serves and elevates art in a unidirectional manner,” he said, “but art often elevates technology in return. There’s an inherent reciprocity between the two.”

Subtle but Important Gestures: Using Robots to Convey the Movements of Musicians

Whenever two or more musicians perform together, they communicate with each other wordlessly, through subtle movements and gestures. This communication is not only vital for the musicians; it is also an important part of the experience for the audience.

But what happens when musicians can’t be together on a stage while they perform? The onset of the pandemic, and the subsequent need for musicians to perform together virtually rather than physically, laid bare the limitations of video conferencing applications like Zoom.

First, there’s the delay. As explained by Michael Gurevich, associate professor in the Department of Performing Arts Technology (PAT), “Zoom itself is not really good for playing music because the underlying audio technology of Zoom adds a bunch of delay into the transmission. It does that so that our image and sound look synchronized.” If musicians in two separate locales want to perform together and transmit only audio, specialized audio software that eliminates much of the delay makes that possible. “Musicians halfway across the continent can actually play music together, and it feels like they’re in the same space,” said Gurevich. “It can be tightly, rhythmically synchronized.” But once video is introduced, that synchronization is lost.

Another limitation of video is that it simply isn’t as engaging as sitting in the same room with performers. “Research shows that, in all kinds of different settings, watching someone on a two-dimensional flat video screen is not as compelling or persuasive or visually interesting as seeing something three-dimensional moving in space with you,” Gurevich said.

With their project Visualizing Telematic Performance, Gurevich, John Granzow (associate professor in the PAT department), and Matt Albert (associate professor and chair of the Department of Chamber Music) have been exploring ways to incorporate a visual component and convey the physical presence of musicians – for the benefit of the other performers as well as for the audience – when the players are not co-located.

The project has been supported by both the Arts Initiative, a university-wide program that seeks to place the arts at the center of research, teaching, and community engagement at U-M, and FEAST, which stands for Faculty Engineering/Arts Student Teams. In partnership with the Multidisciplinary Design Program, FEAST is a program of ArtsEngine, an interdisciplinary initiative of U-M’s North Campus schools and colleges.

Creating a Musician’s Avatar

Telematic music refers to a field of research and practice exploring the various implications of musical performance by people who are separated geographically. In January 2021, the researchers on this project began to examine ways to create three-dimensional objects that would be on a stage with performers, representing the movements of other performers in a different location. The researchers refer to these objects as robots, or mechanical avatars, or kinetic sculptures. “Part of why we haven’t settled on this term,” Gurevich explained, “is because it actually functions in different ways at different times. Sometimes it really feels like it’s the musician, and sometimes it feels like it’s another person, and sometimes it feels like something much more abstract.”

Regardless of the term used to describe these three-dimensional objects, their essential function is to move in space alongside the musician on stage, reflecting the movements of the performer in the other location. The musician’s body movements are captured by an infrared motion capture system: the musician wears a suit with reflective markers on the joints and limbs, and the system tracks those markers to record the performer’s movements. The team then uses software they wrote to translate the performer’s movements into instructions for the robots.

Thanks to the technologically rich environment of the Chip Davis Studio, centerpiece of the Brehm Technology Suite in the Earl V. Moore Building, the PAT department has access to a motion capture system similar to what’s commonly used in computer animation. “We’re using it in a very different way from what’s done in animation,” Gurevich pointed out, “in that our system is actually able to stream the location data of all the markers live in real time, so we can get a very, very accurate depiction of the performer’s movements, live, with very little delay.”

The robots were largely created by the students working on the project, including Adam Schmidt, who is currently pursuing an MA in media arts, after graduating in 2022 with dual degrees in sound engineering and electrical engineering. Drawn to the team primarily for the robotics aspect, Schmidt used earlier versions of the team’s robotic creations as inspiration when designing and building the most recent version. He explained that the process involved analyzing musicians’ myriad movements, and then whittling them down: “We can’t use all of their motion data when mapping their motion to the robot because there is simply too much. We had to really question what movements, no matter how big or small, were the most important to communicate musical ideas, such as phrasing and cues, and pare it down to just a few data points.”
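The paring-down Schmidt describes can be illustrated with a small sketch. It is hypothetical throughout: the marker names, the coordinate convention (meters, y pointing up), the two chosen features, and the servo ranges are assumptions made for illustration, not details of the team's software. The point is only that a full frame of marker positions can be reduced to a couple of expressive values before anything is sent to a robot.

```python
import math


def clamp(value, low, high):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def reduce_pose(markers):
    """Reduce one frame of marker positions to two control values.

    markers maps a marker name to an (x, y, z) position in meters,
    with y pointing up (an assumed convention).
    """
    _, hip_y, hip_z = markers["hip_center"]
    _, chest_y, chest_z = markers["chest"]
    # Forward or backward lean: angle of the hip-to-chest vector from vertical.
    lean_deg = math.degrees(math.atan2(chest_z - hip_z, chest_y - hip_y))
    # Hand lift: height of the right hand relative to the chest marker.
    hand_lift_m = markers["right_hand"][1] - chest_y
    return {"lean_deg": lean_deg, "hand_lift_m": hand_lift_m}


def to_robot_command(features):
    """Map the reduced features onto two hypothetical servo angles in degrees."""
    tilt = clamp(features["lean_deg"], -30.0, 30.0)
    # Scale a hand lift between -0.5 m and 0.5 m onto an arm angle between 0 and 90.
    arm = clamp((features["hand_lift_m"] + 0.5) * 90.0, 0.0, 90.0)
    return {"tilt_servo": round(tilt, 1), "arm_servo": round(arm, 1)}


frame = {
    "hip_center": (0.0, 1.0, 0.0),
    "chest": (0.0, 1.5, 0.1),
    "right_hand": (0.3, 1.7, 0.2),
    "left_knee": (-0.1, 0.5, 0.0),  # captured but deliberately ignored
}
print(to_robot_command(reduce_pose(frame)))
```

Clamping keeps an exaggerated gesture from driving a motor past its range, and every marker not named in `reduce_pose` is simply dropped.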

A Culminating Performance

In early December 2022, the team held an event that capped the year-long Arts Initiative project: a telematic concert held simultaneously in Ann Arbor and in Charlottesville, at the University of Virginia (UVA). Their collaborators in the McIntire Department of Music at UVA included I-Jen Fang, associate professor of percussion; Luke Dahl, associate professor of composition & computer technologies; and PhD student Matias Vilaplana. Both Dahl and Vilaplana are also U-M alumni: Dahl earned a bachelor’s degree in electrical engineering in 1996, and Vilaplana earned a master’s degree in media arts from SMTD in 2019. Performing in Ann Arbor were Matt Albert and two SMTD students, Sui Lin Tam (BM ’23, percussion) and Gavin Ryan, who is simultaneously pursuing an MA in media arts and a DMA in percussion.

For the event, the UVA team had a performer and one of the U-M team’s robots in Charlottesville. The U-M team had performers and a robot in Ann Arbor. In each location, audiences witnessed a series of live duets between a musician in Ann Arbor and one in Charlottesville (both wearing motion capture suits) as well as a robot whose movements reflected those of the musician in the distant locale. “And it all worked somehow,” said Gurevich. “It was kind of a wow thing, unlike anything anyone had seen before. I don’t know of anyone else who’s done anything like this.”

In preparing for this performance, one interesting problem to be solved was how to communicate when the piece should begin. Without a conductor, chamber musicians rely on expressive gestures to synchronize the start of the piece. “We spent a lot of the rehearsals figuring out how to cue the beginning of the piece, and what the robot should do when that happens, and how we can capture that or learn it,” said Gurevich. “We found out that, as long as it was consistent, that’s what the performers cared about.”

The team also noticed that the implications of the robot’s movement aren’t the only important aspect to study; its moments of stillness are also compelling. Gurevich noted, “Once they start playing, the robots’ movements get very controlled and very specific, and when there is a rest, it becomes really dramatic and powerful.” Of course the robots only become very still in those moments if the performer wearing the motion capture suit also becomes still. In one instance, the team noticed that a percussionist was counting the beats of a rest with slight movements of her hands, and the robot several hundred miles away was moving in time with that musician. That moment raised an important question, Gurevich said: “Do we want the performers to just act naturally and not think about the fact that there’s this robot? Or should they act in certain ways to evoke certain responses?”

While an important part of this team’s research is about studying how performers and audiences relate to the robots, Gurevich noted that “the deeper goal is really to help develop perspective and understanding on how performers move.” Schmidt agreed, saying “It’s been so interesting to investigate and try to understand the way musicians communicate non-verbally with their bodies,” and he summed up the project’s goals going forward: “We are hoping to deepen our understanding of what it means to communicate musically from musician to musician as well as from musician to audience.”

Delivering a Digital Experience for the Blind

Obtaining information digitally has long been a fact of life for many people, but several barriers to digital content still exist for those with visual impairments.

With a project that has roots going back many years, two U-M professors have set out to push through those barriers and improve access to digital content for the blind.

Sile O’Modhrain, associate professor and chair of the Department of Performing Arts Technology, and Brent Gillespie, professor of mechanical engineering and robotics, have been working together for decades, going back to their time in graduate school at Stanford University. About 10 years ago, they began seeking funding for a project named Holy Braille: a digital device that will offer a full-page, refreshable, tactile display – something akin to a braille Kindle – to enable blind people to access information digitally using their sense of touch. The technology presents a novel use for haptics, which uses vibration or other physical sensations to give the equivalent of a tactile sensation with digital devices.

Current assistive technologies that give blind people access to digital information fall short in many ways and with many types of content. For example, audio tools don’t indicate paragraph headings, indentations, or section breaks, and they can’t adequately convey graphic elements like charts or maps. As a result, many visually impaired people also rely on tactile content like braille or raised maps.

Devices do exist that can work with a tablet to display braille text, but they only display one line of text at a time. While a single-line display may work with some kinds of content, others require multi-line display, including, as O’Modhrain pointed out, computer coding, mathematics, and music. “With piano music, you have the left hand written below the right hand, and for vocal music you’ve got the words above the music,” she said. “So if they’re not spatially organized, you actually lose an awful lot of information.”

Because their access to digital content continues to be limited, many blind people rely, to some extent, on hard-copy braille materials, but these also have limitations. They are bulky and expensive, for instance. O’Modhrain, who is blind, noted that her braille edition of a one-volume cookbook fills 12 volumes. The braille version of The Lord of the Rings is about 20 volumes. And it can cost a manufacturer several dollars per page to produce an embossed braille book.

Perhaps the greatest limitation of hard-copy braille is simply that it isn’t digital. It doesn’t have the immediacy of digital content, and it can’t be updated, modified, or edited by the user. A braille document doesn’t allow for students to add notes or collaborate with others. Braille sheet music poses similar challenges for musicians, as O’Modhrain explained: “If I have a piano lesson, and my teacher wants me to change the fingering of a passage, I can’t just scribble the fingering in with a pencil.”

Finding the Solution to a Complicated Problem

When O’Modhrain and Gillespie first began seeking funding for their project about 10 years ago, they turned to their employer, the University of Michigan. They were among the first to apply for a grant through MCubed, an initiative designed to catalyze innovative research and scholarship with seed funding for interdisciplinary faculty-led teams. They succeeded in obtaining that initial funding and then hired a graduate student, Alex Russomanno, who took on the project for his PhD in mechanical engineering. Together they founded NewHaptics, a start-up led by Russomanno that is building on his PhD research on microfluidic device design, to realize their goal. NewHaptics went on to acquire additional rounds of funding in the form of Small Business Innovation Research (SBIR) grants from the National Science Foundation (NSF) and the National Institutes of Health (NIH).

The goal of NewHaptics is to produce a commercially available full-page digital device with a tactile display, solving a problem that other innovators have yet to crack. Braille text is a series of cells, with each cell containing six dots. The dots are either on or off – raised or flat – in a particular arrangement to represent letters or symbols. The difficulty in creating a digital braille display has to do with the type of mechanism that can create this arrangement of dots in real time. NewHaptics’ solution to this complex problem is to use air or fluid beneath the display to drive bubbles that will push the dots up and down. “And because we did that,” O’Modhrain explained, “we don’t need a huge mechanical thing sitting underneath every dot. We created these series of channels and bubbles where basically we can use circulating air to tell which dots to go up and down.”
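To make the six-dot cell concrete, here is a minimal sketch of how the raised-or-flat state of a multi-line page might be represented in software. It is an illustration only and says nothing about how the NewHaptics device is driven; the function names are hypothetical and only the letters a through e are included. The dot numbering (1, 2, 3 down the left column and 4, 5, 6 down the right) and the Unicode braille pattern encoding are standard.

```python
# Dots in a six-dot cell are numbered 1-2-3 down the left column, 4-5-6 down the right.
LETTER_DOTS = {
    "a": {1},
    "b": {1, 2},
    "c": {1, 4},
    "d": {1, 4, 5},
    "e": {1, 5},
}


def cell_grid(dots):
    """Return a 3x2 grid of booleans, rows top to bottom; True means the dot is raised."""
    return [[(row + 1) in dots, (row + 4) in dots] for row in range(3)]


def cell_char(dots):
    """Unicode braille pattern for a set of raised dots (dot n sets bit n-1 above U+2800)."""
    return chr(0x2800 + sum(1 << (dot - 1) for dot in dots))


def page_state(lines):
    """Raised-dot state for a whole page: one list of cell grids per line of text."""
    return [[cell_grid(LETTER_DOTS[ch]) for ch in line] for line in lines]


page = page_state(["bad", "ace"])
print(len(page), "lines;", len(page[0]), "cells in the first line")
print("".join(cell_char(LETTER_DOTS[ch]) for ch in "bead"))
```

Keeping every line in one structure is what preserves the spatial relationships O’Modhrain describes, such as one hand's music sitting directly below the other's.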

A full-page tactile device will transform the digital experience for blind people, not only giving them access to a vast amount of online content, from books to maps to sheet music, but also enabling them to edit, annotate, and interact with that content.

At this point, NewHaptics has developed a prototype with a display that is about a quarter of a page, and O’Modhrain estimates the company is about three years away from the full-page version. Acknowledging the complexity of their mission, she noted, “We’re just taking things piece by piece and then will scale up. But there’s nothing we think we can’t master.”

Using Techno Music to Diagnose Sleep Disorders: A U-M–Mayo Clinic Collaboration

After Kara Dupuy-McCauley graduated from SMTD in 2004 with a BM in music technology and piano performance, she spent a few years as a working musician – touring with her punk band, teaching piano, playing weddings. Then, halfway through a degree in music therapy, she took a biology class that sparked a realization and led to a dramatic shift in direction. She decided to become a doctor, though she was determined to remain involved with music. “Music has been a focus of my entire life,” she said, “so when I made the decision to go to medical school, I always had the thought in the back of my head that it would be really great to bring some sort of musical aspect into my medical career in some way.”

Now a Mayo Clinic pulmonologist with a specialty in sleep medicine, Dupuy-McCauley hit upon a way to do just that during her fellowship at the clinic. While reading sleep studies and noting the waveforms of the resulting data, it occurred to her that incorporating a sound element might make the data easier to interpret. She discussed the idea with her mentor, Dr. Timothy Morgenthaler, and they decided to focus on one aspect of a sleep study: the diagnosis of hypopnea, abnormally slow or shallow breathing during sleep. Dupuy-McCauley then reached out to her friend and former professor – Stephen Rush, professor of music in SMTD’s Department of Performing Arts Technology (PAT).

For Rush, his own sleep study had gotten him thinking. After the study, he asked his doctor if he could see the data readout, and to him, it looked like a mess, like children’s crayon scribbles made during a bumpy road trip. “How do you get any data from that?” he asked. When he received Dupuy-McCauley’s email, it resonated with him on many levels.

Together, they began to think about the idea of creating techno music incorporating sleep data, what Rush described as a sort of sonic “sleep biography.” The theory was that diagnosticians’ accuracy would improve if patients’ sleep studies were set to music, as the music could help highlight breathing anomalies during sleep, not to mention make the process of analyzing the data more engaging. After 12 years of teaching about the creative process at SMTD, Rush has concluded that, “if you’re having fun, you’re probably paying more attention,” and if they could make techno tracks involving sleep data, he figured, “the technicians will be paying more attention, because it’ll be fun and hip.”

“A Very U-M Collab”: Assembling the Team

Rush thought the project would be perfect for the program Faculty Engineering/Arts Student Teams, or FEAST. In partnership with the Multidisciplinary Design Program, FEAST is a curricular program of ArtsEngine, an interdisciplinary initiative of U-M’s North Campus schools and colleges: A. Alfred Taubman College of Architecture + Urban Planning, Penny W. Stamps School of Art & Design, SMTD, the College of Engineering, and the School of Information. Students from several different U-M schools and colleges soon joined the Sonification of Sleep Data team. Rush also enlisted his longtime friend Greg Syrjala, a U-M alum who graduated in 1981 with a degree in electrical engineering. Commenting on the makeup of the team, Rush said, “It’s a very U-M collab, frankly.”

Like Dupuy-McCauley, one of the students working on the project was drawn to it in part because of his passion for music. Oliver Zay is pursuing a master’s degree in applied statistics and obtained his undergraduate degree in computer science from U-M’s College of Engineering. He is also a graduate student instructor (GSI) for the Michigan Marching Band. “I love being able to work with the band and continue my passion for music,” he said. This project interested him, he noted, because “I liked the idea of combining different disciplines to approach diagnosing sleep disorders in a new way.”

Identifying the Problem

Fans of techno music understand the central significance of “the drop” – the moment that comes after a buildup of tension in the music, a brief pause, and then, BAM. The beat drops, a heavy bass kicks in, the crowd goes wild. “It’s probably the most important major transition in techno music,” noted Rush. “The drop,” it turns out, also plays an important role in diagnosing sleep disorders, though in that context, it refers to a drop in oxygen levels.

In sleep studies, two types of breathing pauses cause those drops: apnea, which is at least a 90 percent reduction in airflow, and hypopnea, which is at least a 30 percent reduction in airflow that causes the oxygen level to drop. The cause of these breathing pauses is either obstructive, meaning that the airway narrows or even closes off, or central, meaning that the brain is not sending the signal to breathe. Determining the cause is vital to proper diagnosis and treatment, and while that cause is fairly easy to discern with apnea, it’s harder to tell with hypopnea.

Phase one of the project was to use sound – and in fact, music – to improve the accuracy and efficiency of diagnosing instances of hypopnea. Phase two, which is further down the road, is to use sound to distinguish between the two different types of hypopnea.

Combining Disciplines to Reach a Solution

The team decided early in the process that, rather than attempting to create tracks directly from sleep study data, they would use that data to modulate music that had already been written. In other words, the sleep data would be incorporated into existing music tracks and used to, as Syrjala explained, “change or control things like tempo, pitch, volume, frequency range, delay time, etc.” Rush composed a techno piece, and then the team experimented with using the data to modulate the music in various ways. “When the test subject’s oxygen level decreases,” Syrjala said, “high frequency information is either removed or enhanced in the audio, resulting in an immediately noticeable change.” The team tried many different ways of modulating the tracks to determine which method communicated these oxygen-level drops most clearly. In “Music File 01,” on the team’s website, listeners can distinctly hear the moments when the beat “filters out,” sounding suddenly muffled, at several points (at :11, :24, :44, and so on). Those moments clearly represent, even to the untrained ear, a patient’s instances of hypopnea.
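For readers curious about what “filtering out” might look like in practice, here is a minimal sketch in Python. It is not the team’s implementation: the sample rate, the oxygen thresholds, the cutoff frequencies, and the function names are all hypothetical, chosen only to illustrate the “removed” case Syrjala describes, in which a falling oxygen level strips high frequencies from the music.

```python
import math

SAMPLE_RATE = 8000  # samples per second (hypothetical value for this sketch)


def cutoff_for_spo2(spo2, normal=95.0, low=85.0,
                    open_hz=3500.0, muffled_hz=300.0):
    """Map an oxygen saturation reading (percent) to a filter cutoff in Hz.

    All thresholds are illustrative assumptions, not clinical values.
    At or above `normal` the filter is wide open; at or below `low`
    it is fully muffled; in between, the cutoff moves linearly.
    """
    clamped = min(max(spo2, low), normal)
    t = (clamped - low) / (normal - low)
    return muffled_hz + t * (open_hz - muffled_hz)


def modulate(audio, spo2_per_sample, sample_rate=SAMPLE_RATE):
    """Apply a one-pole low-pass filter whose cutoff follows the oxygen level.

    `audio` and `spo2_per_sample` are equal-length sequences of floats:
    one audio sample and one oxygen reading per time step.
    """
    out = []
    y = 0.0  # previous filter output
    for x, spo2 in zip(audio, spo2_per_sample):
        fc = cutoff_for_spo2(spo2)
        # Standard smoothing coefficient for a one-pole low-pass filter.
        alpha = 1.0 - math.exp(-2.0 * math.pi * fc / sample_rate)
        y += alpha * (x - y)
        out.append(y)
    return out
```

Fed a bright tone alongside an oxygen trace that dips partway through, `modulate` returns audio that turns noticeably duller during the dip, which is the kind of audible cue described above.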

Phase two of the project, according to Dupuy-McCauley, will involve a more in-depth analysis and will probably incorporate additional data streams from sleep studies. She hopes this phase will enable researchers to create what she calls a “sonic phenotype,” or a characteristic sound that will help distinguish between central and obstructive hypopnea.

In addition to the primary goal of helping patients get a proper diagnosis and benefit from better sleep, the team seeks to foster a new understanding and appreciation of transdisciplinary research, where, Rush noted, “people of differing disciplines come to the table bringing their discipline and at the same time leaving it at the door, saying, you know, I’m open to anything.” For Oliver Zay, the combination of disciplines greatly enriched the experience of working on the team. “We all had wildly different backgrounds (computer science, architecture, music production),” he noted, “but we found our own ways to put our stamp on the project. I truly think that having such a diverse array of perspectives and experiences on the team made the project better, and also a lot of fun.”

Dupuy-McCauley described the project as a “really cool multidisciplinary marriage of music and technology and medicine,” one that she hopes will be a model for other researchers. “Back in the day of Da Vinci, art and science were one,” she said, “and over the centuries they’ve become very siloed, and there isn’t a lot of overlap. Maybe some of the big problems people are trying to solve in medicine could be solved by approaching things from a more artistic and human perspective rather than just relying on hard science.”